Fixing Trust In Our Systems, Online and in Person.

The biggest problem humanity faces today is that we have lost trust in our systems. How do we regain that trust? We build new ones.

The most important things to quantify are often the most difficult to quantify. Should trust be one of them?

Think about all the things our society doesn't trust right now and the problems that the lack of trust is causing. It starts with our systems. Government, medicine, money, academia, religion, food, voting, media, police, and even science are all systems overwhelmingly lacking trust. When people no longer trust the systems that govern their society, the growth of the entire civilization is stunted. To reach our fullest potential together, we must find ways to construct 'high-trust' societies, networks, or digital communities that can counteract and eliminate the pervasive corruption that has infiltrated almost all of our systems. This corruption is our biggest problem on Earth right now. Fixing trust starts with fixing these systems, and we have the opportunity right now to build new ones that make the old ones obsolete. So let’s consider it.

Why is trust so important?

Groups of people collaborating, cooperating, and sharing knowledge is arguably the most powerful force on Earth that we can generate. Think of nearly any amazing thing that has ever been built, and chances are that many people were involved. While some of those people were paid with money, forced into labor, or coerced with fear, it doesn't alter the fundamental premise: People collaborating is a powerful force. At The Society of Problem Solvers, we would argue that the more voluntary and freedom-enriched that collaboration is, the more likely an amazing outcome becomes.

In a free world, if groups of people trust each other, they can work together and achieve much more than if they spend most of their time either working alone or assessing each other's trustworthiness in the first place. The amount of energy spent on gauging trust could have been invested in building things, creating new knowledge, or solving problems together.

But when people hear about trust in the online realm, their minds immediately jump to fears of Social Credit Scores and CBDCs (Central Bank Digital Currencies), and these fears are justified. We should absolutely fear allowing centralized and powerful entities to issue us credit scores or have the power to dictate our lives with programmable centralized digital currencies.

However, these fears often lead us to overlook two things. The first is the difference between centralized and decentralized systems that we wrote about HERE. The difference between a centralized trust system and a properly built decentralized and transparent one is the same difference you would find between CBDCs and, say, Bitcoin. Sure, they are both forms of 'crypto,' but they are worlds apart. That's because of the 'Last Hand on The Bat' theory (which we also explain in depth in that same article HERE). Basically, who has the final control? A centralized entity like the Chinese Communist Party? Or a decentralized network like all the individual nodes and owners of Bitcoin?

The second thing that these fears sometimes cause us to overlook is that solving the trust problem, both online and in the real world, would make it far easier for us as humans and societies to embark on even bigger and more exciting projects together. If we are ever to become a more advanced civilization, we need a way to work together more effectively, and to trust each other and our systems with high confidence. Trust is the cornerstone of creative collaboration.

Another major difference between centralized social credit scores (like what they have in China) and what could exist in a digital 'high trust' society is that the latter would be voluntary, while in China, participation is mandatory. Imagine you want to join a group of high-trust individuals working on a project together. Is there a way to voluntarily enter this project with trust quantified in advance? If you are a high-trust person, shouldn’t you want to be part of a high-trust society?

This concept can be a little tricky to grasp and often receives unwarranted pushback. So, let's shift gears for a moment. Think about this… There is a super fascinating and well-tested problem in game theory called the 'prisoner's dilemma' that shows that for short-term games (or any kind of short-term collaboration), if you are a scam artist, you will likely win. However, when you decide to engage in long-term games with other people - such as building companies, societies, systems, or governments together - long-term trust and cooperation will almost always win in the end. This long-term version is called the 'iterated' prisoner's dilemma, and the best-known strategy for it is 'tit for tat.'

‘Tit for Tat’ basically means that you want to cooperate with people, but if you get burned, you will punish others immediately for it, with room for forgiveness. One of our favorite thinkers on this subject is Naval Ravikant, and he explains here how he was able to build many successful teams and systems using trust, compound interest, and the tit-for-tat solution to the iterated prisoner’s dilemma to create long-term relationships with people:

If you want to really understand the iterated Prisoner’s Dilemma, we suggest this video. It is simple and clear and has great visuals:

If we are trying to work with others - whether in person or online in groups such as Network States (like we wrote about HERE) or what we truly believe in, Collective Intelligence “Swarms” (like we wrote about HERE) - we will always have that initial problem to solve: should we trust the other people involved? Do we trust the people in the group with us?
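To make the short-game versus long-game point concrete, here is a minimal sketch of the iterated prisoner's dilemma. It rests on a few assumptions that are not from this article: the payoff numbers (5, 3, 1, 0) are the conventional textbook values, the player names are made up, and the "tournament" is a tiny round-robin of three tit-for-tat players and one always-defect "scam artist."

```python
from itertools import combinations

# Conventional payoff values (an assumption; the article gives no numbers).
# (my_move, their_move) -> my points. "C" = cooperate, "D" = defect.
PAYOFF = {("C", "C"): 3, ("C", "D"): 0, ("D", "C"): 5, ("D", "D"): 1}


def tit_for_tat(opponent_moves):
    """Cooperate first, then copy whatever the opponent did last round."""
    return "C" if not opponent_moves else opponent_moves[-1]


def always_defect(opponent_moves):
    """The 'scam artist': defects every round regardless of history."""
    return "D"


def play_match(strategy_a, strategy_b, rounds):
    """Play one repeated game and return (score_a, score_b)."""
    moves_a, moves_b = [], []
    score_a = score_b = 0
    for _ in range(rounds):
        a = strategy_a(moves_b)  # each side only sees the other's past moves
        b = strategy_b(moves_a)
        score_a += PAYOFF[(a, b)]
        score_b += PAYOFF[(b, a)]
        moves_a.append(a)
        moves_b.append(b)
    return score_a, score_b


def round_robin(players, rounds):
    """Every player meets every other player once; returns total scores by name."""
    totals = {name: 0 for name, _ in players}
    for (name_a, strat_a), (name_b, strat_b) in combinations(players, 2):
        score_a, score_b = play_match(strat_a, strat_b, rounds)
        totals[name_a] += score_a
        totals[name_b] += score_b
    return totals


# Hypothetical population: three cooperators and one scam artist.
players = [
    ("tft_1", tit_for_tat),
    ("tft_2", tit_for_tat),
    ("tft_3", tit_for_tat),
    ("scammer", always_defect),
]

short_game = round_robin(players, rounds=1)
long_game = round_robin(players, rounds=100)
print("1 round:   ", short_game)
print("100 rounds:", long_game)
```

Under these assumptions the scammer comes out ahead when everyone meets only once, while the tit-for-tat players finish far ahead over 100 rounds. Note that in any single head-to-head match the scammer still edges out tit for tat slightly; tit for tat wins on total score because it keeps collecting the rewards of cooperation from everyone else.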

While tit for tat seems to work very well, we believe there is an even better solution to the iterated prisoner's dilemma. What if the prisoners had a way to know in advance how the other person had played long-term iterated games in the past? If one prisoner could see the other's history, it would influence their response to the dilemma. And if it were voluntary, people could avoid working with those who do not perform well in long-term iterated relationships.

In other words, what if the other players (and you) had trust scores that were visible to each other? Think of it like how on eBay and Amazon, both buyers and sellers have versions of this. Peer-to-peer trust scores based on previous interactions/iterations.
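As a rough illustration of what "seeing the other player's history" could look like, here is a small sketch. Everything in it is hypothetical: the `Player` class, the `willing_to_work_with` rule, and the thresholds (80% cooperation over at least 5 interactions) are placeholders that each society would choose for itself.

```python
from dataclasses import dataclass, field


@dataclass
class Player:
    name: str
    # Publicly visible record of past moves: True = cooperated, False = defected.
    history: list = field(default_factory=list)

    def cooperation_rate(self):
        """Share of past interactions in which this player cooperated."""
        if not self.history:
            return None  # no track record yet
        return sum(self.history) / len(self.history)


def willing_to_work_with(candidate, min_rate=0.8, min_interactions=5):
    """Hypothetical screening rule: require a long enough, mostly cooperative record."""
    rate = candidate.cooperation_rate()
    if rate is None or len(candidate.history) < min_interactions:
        return False  # newcomers need some other way to earn initial trust
    return rate >= min_rate


reliable = Player("reliable", [True] * 9 + [False])   # one slip in ten
scammer = Player("scammer", [True, False, False, False, False])
newcomer = Player("newcomer")

for player in (reliable, scammer, newcomer):
    print(player.name, willing_to_work_with(player))
```

The rule tolerates an occasional bad interaction, which keeps the forgiveness that makes tit for tat work, while letting people opt out of playing with someone whose record is mostly defection. The newcomer case shows why a separate on-ramp for earning initial trust is needed.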

The idea of trust online even became a hotly debated topic recently between Elon Musk and Jordan Peterson on X (Twitter). We wrote about it back in July, HERE. Should anonymity be allowed online? Jordan's position was that transparency was key - people should use their real names online. This way, trust could be more easily built up. Elon countered by saying that due to censorship and potential issues - such as your boss disagreeing with your political views (and possibly losing your job as a result) - anonymity should be protected.

Our conclusion - which still stands today - is that maybe they are both right. You should be able to be anonymous online. However, a person who risks being vulnerable and is their authentic self will likely earn trust more quickly. An anonymous person will have to prove their trustworthiness more. In the end, both an anonymous person and a real person should be able to earn trust in an iterated prisoner's dilemma-type long-term game.

One interesting viewpoint - take Instagram and Finstagram (fake Instagram) accounts, for example. Studies have shown that on anonymous accounts - the fake ones - people are more likely to express their true thoughts online. Without the fear of being canceled, fired, or shunned by peers, individuals are more willing to speak openly. On real accounts with real names, people are more likely to engage in deception, present half-truths, and curate their feeds with images and stories that portray themselves and their lives in a flattering light. This is another reason why we believe that both anonymous and transparent individuals should be allowed in high-trust societies, because there might be tangible value in it.

Furthermore, being anonymous online is a contentious issue that divides people. Any solution to the trust problem online must start here in order to initiate a remedy. So can a high-trust society online include both authentically named and anonymously named users? If so, how?

We have been exploring interesting solutions to this problem, and nearly all of them revolve around a decentralized system for trust. A few of them are:

Trust Tokens - imagine giving crypto tokens to people who perform trustworthy acts for you. More on this in a moment.

Weighted decentralized trust ratings - similar to trust tokens, but the more trustworthy you are, the more value your tokens or ratings have when you give them to others.

Interview Processes for High Trust Societies - have randomly selected, already trusted members of the society interview new members in various formats, depending on whether the new member wants to remain anonymous or not. New members might even need to pass a test or perform an act for the community before being allowed in.

Trust Tests - Anyone who appears untrustworthy, such as new members of a high-trust society, could be subjected to random tests administered by other members until they reach a baseline level, allowing them to participate in missions or iterations with the rest of the network society.

Meeting in Person - mixing IRL (In Real Life) with URL (online). Create a “vouching” system where at the very least people can meet others in the real world and “vouch” that they are a real person. AI and bots would have a very tough time infiltrating this system with any level of trust, as their networks would not connect to enough real people.

If we want to excel as problem solvers, builders, and knowledge creators, then trust is something we should constantly be on the lookout for. Because once we have trust, we possess a force. Think of it like a SEAL team, where each member has the others' backs. Of course, each high-trust society should set its own parameters, but solving this one problem resolves many.

Let's take, for example, the idea of 'Trust Tokens.' Imagine that we each carried a trust score with us on any social media platform, or other voluntary high-trust societies, network states, or online swarms, and the only way to increase or decrease that score was through people giving us tokens, similar to the scoring systems on eBay or Amazon. Each of us could be assigned a crypto wallet where these tokens would populate. However, we could only give them away; we wouldn't be able to save our own populated tokens. If we didn't distribute them within an agreed-upon time period for each one, they would disappear.
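The give-away-only rule and the expiry rule described above can be sketched in a few lines. This is only an illustration under stated assumptions: the `Token` and `Member` classes, the day-number clock, and the 30-day lifetime are all hypothetical, and a real system would live on a ledger rather than in memory.

```python
from dataclasses import dataclass, field


@dataclass
class Token:
    kind: str        # e.g. "silver" or "gold"
    expires_at: int  # day number after which an ungiven token disappears


@dataclass
class Member:
    name: str
    to_give: list = field(default_factory=list)   # tokens issued to hand out
    received: list = field(default_factory=list)  # (kind, giver, review) on the profile

    def issue(self, kind, today, lifetime_days):
        """The system populates the wallet with a token that must be given away in time."""
        self.to_give.append(Token(kind, today + lifetime_days))

    def drop_expired(self, today):
        """Ungiven tokens vanish once their agreed-upon period has passed."""
        self.to_give = [t for t in self.to_give if t.expires_at >= today]

    def give(self, recipient, kind, today, review=""):
        """Hand one unexpired token to someone else; self-awards are not allowed."""
        if recipient is self:
            raise ValueError("tokens can only be given away, not kept")
        self.drop_expired(today)
        for token in self.to_give:
            if token.kind == kind:
                self.to_give.remove(token)
                recipient.received.append((kind, self.name, review))
                return
        raise ValueError(f"{self.name} has no unexpired {kind} token to give")


# Hypothetical members and an assumed 30-day token lifetime.
alice = Member("alice")
bob = Member("bob")
alice.issue("silver", today=0, lifetime_days=30)
alice.issue("silver", today=0, lifetime_days=30)

alice.give(bob, "silver", today=10, review="Delivered the code on time.")
alice.drop_expired(today=31)  # the second, ungiven token has now expired

print(bob.received)
print(len(alice.to_give))
```

Keeping the tokens you hand out separate from the tokens you receive is what prevents hoarding or self-rating: the only way for a score to change is for someone else to spend one of their own limited tokens on you.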

Let's say these were the coins

Every time you give a token to someone, it is added to their profile and trust score, and you can also include a written review, similar to what's done on eBay. For example, this might be a profile you encounter:

David Miller, as you can see, has 221 silver coins, indicating that 221 individuals gave him silver tokens for acts of trust online. Perhaps they participated in a Zoom business meeting with him, made a purchase on Craigslist, assisted in programming, or achieved a digital mission together. David also possesses 80 gold tokens, signifying trustworthy actions in person. Maybe he lent you money, or you attended a fundraiser with him, or his company did a well-done construction job for yours for a fair fee. All acts in person. Most importantly, by looking at this profile, we can see that very few people believe he is untrustworthy: he received only three negative red tokens and one big mistake in the form of a poop emoji. Just like on Amazon or eBay, you can click on these individual tokens to read reviews, understand the reasons for each one, and identify the contributors. If the person providing a review seems untrustworthy, it devalues the negative feedback. Conversely, if they had a high trust score, it would enhance its value to the observer.

Of course, most people tend to click on the diamond and poop emojis first, often starting with the poop emoji to find out what extreme negative behavior earned it. The poop emoji is rare: only one populates in your wallet every two months to give away. In David's case, there was only one poop review, and here it is:

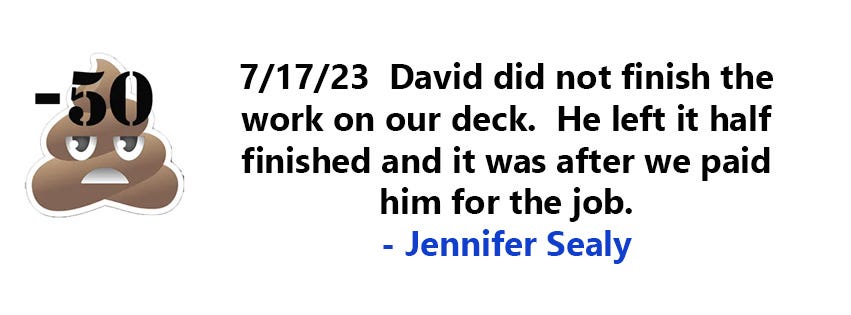

As we can see, it was written by someone who had a bad business interaction with David, just like one you might have on Amazon or eBay. On eBay or Amazon, almost all good users have a few bad interactions. The same is true everywhere. In a high-trust society, rational and reasonable forgiveness is necessary (just as error correction is necessary for growth). This is why tit for tat does so well in the iterated prisoner's dilemma: it allows for forgiveness. To provide even more insight into the trust dynamic here, we can also observe that David responded to the negative review by saying:

Furthermore, we could double-check this review. If it were on a transparent blockchain ledger, there would be an irrefutable way to verify it, but most people don't want to invest that much time. However, we could simply click on the person who left the review to understand their character.

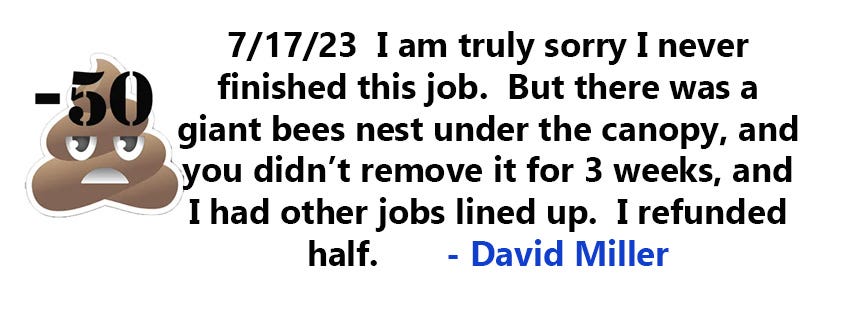

We can see here that Jennifer's trust score wasn't too high, and she had six poop emoji tokens of her own. When we clicked on them, we found a collection of Karen-esque behaviors. As it turns out, her negative rating of David might turn into a positive one if you were in this high-trust society and trying to decide whether you should trust David enough to engage in an iteration with him for whatever you have planned. If someone not trusted doesn’t trust you, it might not hurt you at all.
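One simple way to express "a rating counts for as much as the rater is trusted" is a weighted sum. The sketch below is an assumption-laden illustration, not the scoring behind the mock profiles: the function name, the 0 to 100 trust weights, the reviewer names other than Jennifer, and the default weight for unknown raters are all hypothetical.

```python
def weighted_score(reviews, rater_trust, default_trust=50):
    """Sum review values, each scaled by the reviewer's own trust weight (0 to 100)."""
    total = 0
    for rater, value in reviews:
        # Unknown raters fall back to a neutral, assumed default weight.
        total += value * rater_trust.get(rater, default_trust)
    return total


# Hypothetical trust weights for three reviewers.
rater_trust = {"jennifer": 10, "maria": 90, "sam": 80}

# +1 is a positive token, -1 a negative one.
reviews_of_david = [("maria", +1), ("sam", +1), ("jennifer", -1)]

unweighted = sum(value for _, value in reviews_of_david)
weighted = weighted_score(reviews_of_david, rater_trust)

print("unweighted:", unweighted)
print("weighted:  ", weighted)
```

In this version a low-trust reviewer's negative token is shrunk rather than flipped into a positive, which is a more conservative reading of the idea above. A society could choose a different rule, and it would also need to decide how the weights themselves are computed without letting a small clique inflate each other.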

These kinds of systems could get messy if scorned lovers and the like were allowed on them, so each system would have to decide which parameters were best for it.

Let’s look at a few more mock profiles.

Mike here has barely had any iterations online and he already seems pretty untrustworthy. Dig in deeper and you can see things like this:

As we can see, this is a bigger issue than an incomplete deck, especially if you are trying to work with Mike Mcewel online. We can also see that the poop token was given by David Miller - and you only have 6 of these to give away per year. If we click on David’s name, we see it is the same David Miller from the first profile, the one with a +791 trust score. Someone with as poor a score as Mike Mcewel would likely be banned from many truly high-trust societies.

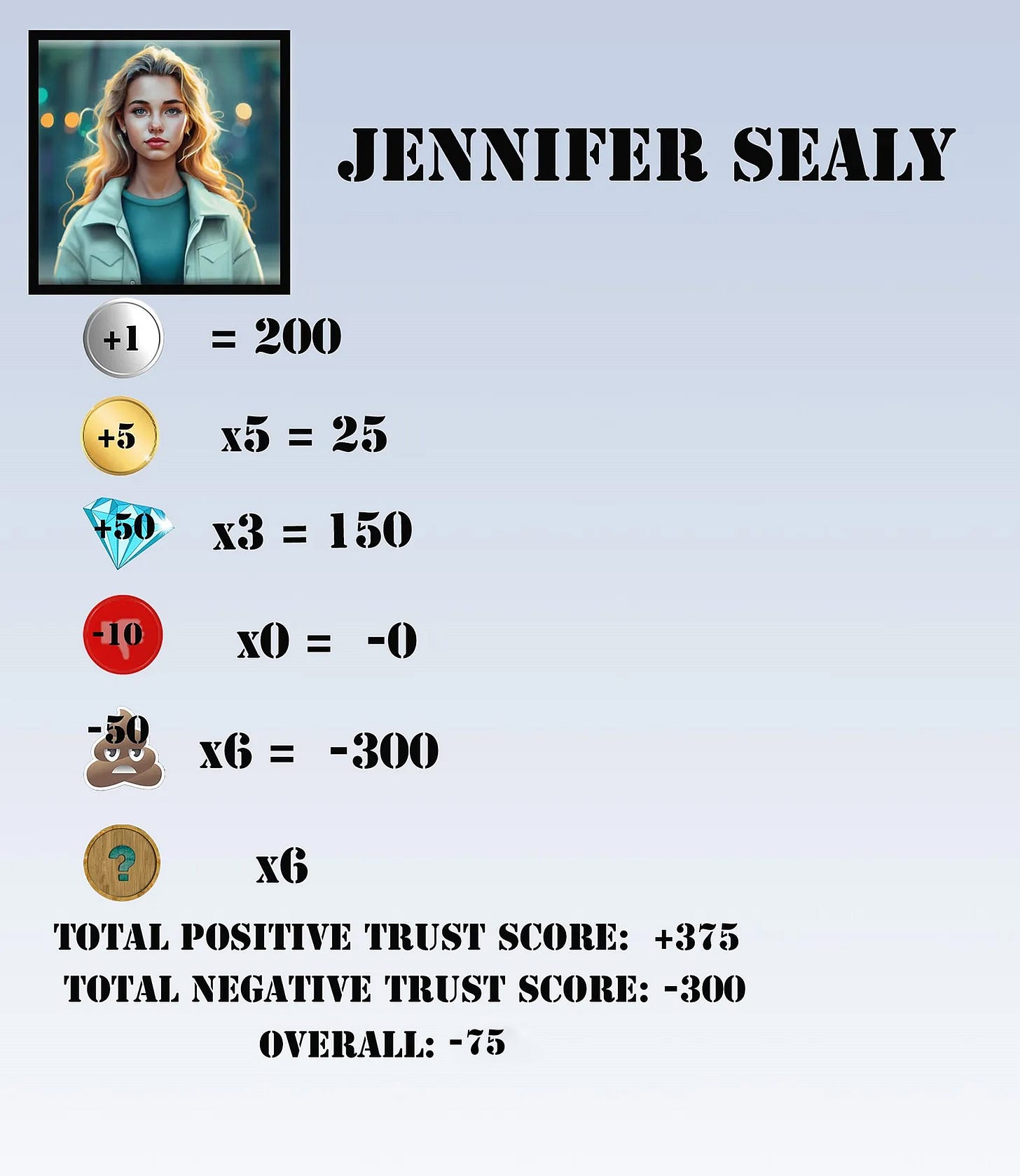

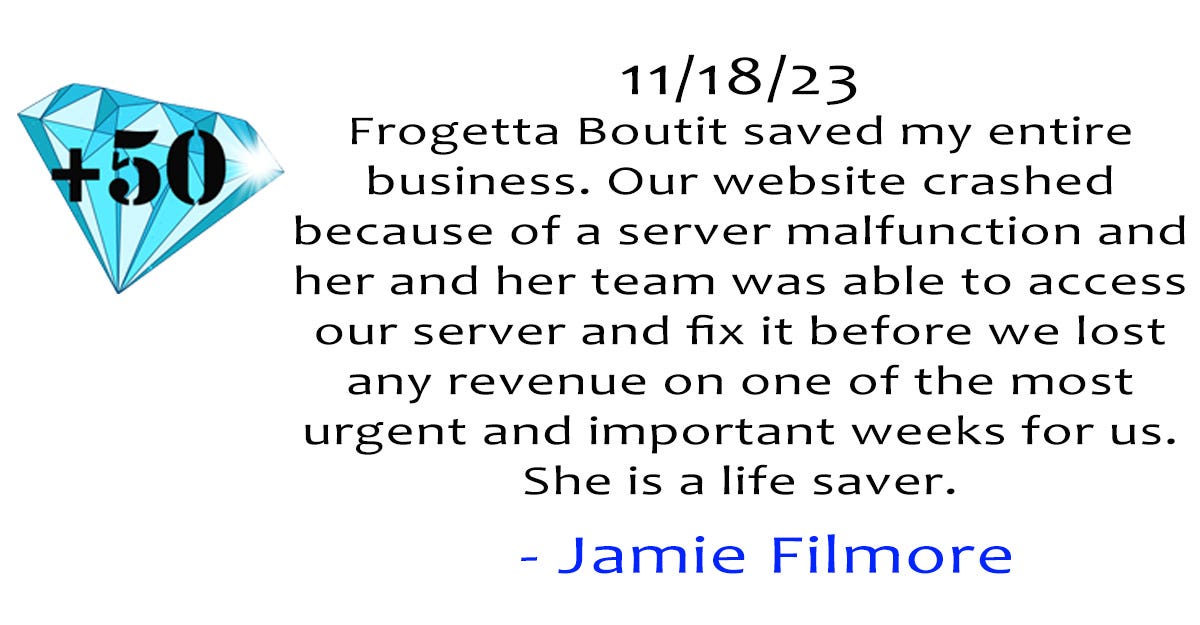

As for the anonymous people, let’s take Frogetta Boutit’s profile. For a person desiring an anonymous profile in a high-trust society, the hard part would be earning trust in the beginning. It would almost be like an initiation phase, or extra steps needed to earn enough trust in order to be able to collaborate.

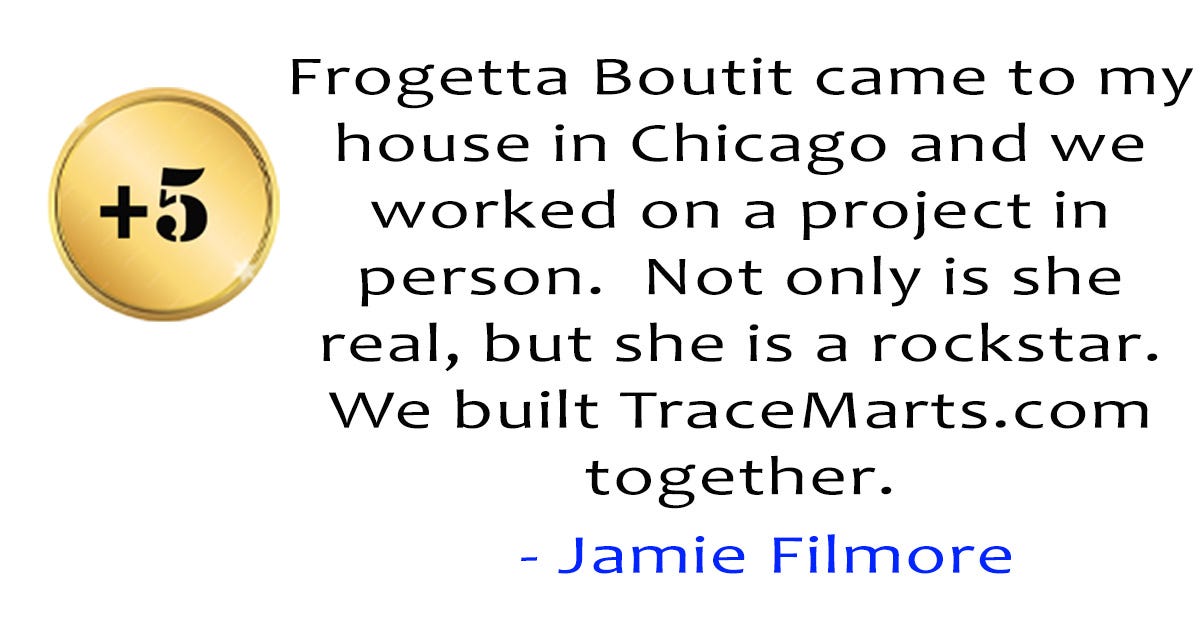

Some high-trust network states or swarms would likely have a series of trust tests, or require you to take a class and pass a test, or require one or more people within the network to vouch for you. But once that person gained some trust, they could continue to earn more like everyone else. Even though her real name is anonymous online, she still has a lot of gold coins, which means she did acts of trust in person, which in turn means that some people know her real identity. This adds trustworthiness to the system. We click on some of them and see these reviews:

As you can see, just because someone chooses to be anonymous online doesn't mean they can't disclose that alias to some of their closest real friends and still receive gold trust tokens for in-person acts. Anonymous individuals could also receive the rare diamond tokens, even for acts never done in person. However, the online act would have to be exceptionally worthy, like this:

As you can see, there are many ways to explore the concept of systemic decentralized trust, both online and in the real world. The challenges trust currently faces will not be solved by our corrupt systems such as government, medicine, and media. We must build high-trust societies, network states, and digital communities on our own - from the bottom up - if we are to combat corruption and foster cooperation. Are trust tokens the answer? Transparent Swarms or Network States? Hard to say until we have tried and tested them all. But a high-trust society is a better society than one where none of us trust the systems or each other.

Would you want to be part of a high-trust society?

Thanks for reading!

Problems are everywhere. Problems are soluble.

Humans solve problems better in high trust groups. We can test it and prove it.

Solving Problems Is Happiness.

*****

Thank you to all of the writers that make this possible. We are accepting guest writers, so if you have an idea for a solution, please reach out. Let’s solve it all, together.

For over 3 billion years on this planet there were only single-celled organisms. Then one day they somehow learned to work together and make complex multi-celled creatures. Right now we are like those single-celled organisms. Our next evolution is finding how to work together, better… (like we wrote about here).


COMMENTS ARE FOR EVERYONE AS A PLACE TO THINK-TANK SOLUTIONS. They will never be for paid-only subscribers and we will never charge a subscription.

My entire business functions on trust. I have entry codes to multi-million dollar homes because I have earned trust. To throw that away would be suicide. It requires self discipline, a code of ethics, and policing yourself.

I've walked into unoccupied bedrooms with thousands of dollars of jewelry lying around. But everyone has their price. Haha, I might not be so trustworthy if I saw twenty million dollars in cash lying around. There was a longtime, well-respected and trusted home builder that eventually succumbed to temptation and began stealing money. It had a chilling effect on the whole local mortgage and home building industry because everyone lost trust.

High trust societies function much more effectively and efficiently, so should win out in a free market situation. But trust is a byproduct, not an end product. It follows naturally from a moral code adhered to. It doesn't necessarily have to be religious and probably wouldn't be in most of your proposed high trust societies, but I do think some sort of code of ethics would need to be placed front and center in each of them so one could make a judgement before entering. For instance, I'm not going to join one that asks me to give all to the cause, no matter how much wealth it promises. Those have a way of turning into cults.

Overall a great post, and I appreciate your commitment to finding solutions. I feel like I can trust you. 😁

Loved this piece. I have been fortunate to have participated in two different types of trusted societies - the early tech industry startups and a rural ranching community. While differing in environments, goals and philosophies, these two shared the fundamental attributes of working together for the benefit of the groups involved as well as the overarching goal.

These were personally rewarding group interactions. Trust, a good work ethic, a strong sense of community and purpose and a willingness to push boundaries are all qualities that make these solutions possible.

Adding swarming - it seems like a beautiful combination. Nothing bonds a trusted group tighter than achieving successful group solutions.

Thank you for all your inspiring work. You have given hope to a nerdy cow wrangler.